Often described as the “operating system for the cloud,” Kubernetes is an open source platform for managing clusters of containerized applications and services. Developed by Google engineers Joe Beda, Brendan Burns, and Craig McLuckie in 2014 and open sourced shortly thereafter, Kubernetes soon became a thriving, cloud native ecosystem in its own right. Today, Kubernetes—which means “helmsman” or “pilot” in ancient Greek—is managed by the Cloud Native Computing Foundation (CNCF), an arm of the Linux Foundation.

Kubernetes was the first graduated project for the CNCF, and it became one of the fastest growing open source projects in history. Kubernetes now has more than 2,300 contributors, and has been widely adopted by companies large and small, including half of the Fortune 100.


Kubernetes 101—Key terms

To begin with, here are a few key terms related to Kubernetes. A more exhaustive list is available on the Kubernetes Standardized Glossary page. You can also use the Kubernetes Cheat Sheet, which lists commonly used kubectl commands and flags.

Cluster

A set of machines, individually referred to as nodes, that run containerized applications managed by Kubernetes.

Node

A virtual or physical machine. A cluster consists of one or more control plane nodes (historically called master nodes) and a number of worker nodes.

Container

A running instance of a container image, which packages software together with its dependencies.

Pod

The smallest deployable unit in Kubernetes: a single container or a group of containers that share storage and network resources, running on your Kubernetes cluster.

Deployment

An object that manages a replicated application whose replicas run as pods (usually through a ReplicaSet). Pods are deployed onto the nodes of a cluster.

ReplicaSet

An object that ensures a specified number of pod replicas are running at any given time.
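A ReplicaSet's job can be pictured as a reconciliation loop: compare the desired number of pods with the number actually running, then create or delete pods to close the gap. The Python below is a toy model of that idea only. `reconcile` and the `web-N` pod naming scheme are hypothetical, and the real controller watches the Kubernetes API server instead of editing a local list.

```python
"""Toy model of ReplicaSet reconciliation (hypothetical names, not Kubernetes code)."""
import itertools

# Hypothetical source of unique pod names for the example.
_pod_ids = itertools.count(1)


def reconcile(desired_replicas: int, running_pods: list[str]) -> list[str]:
    """Return the pod list after one reconciliation pass."""
    pods = list(running_pods)
    # Too few pods: create new ones until the desired count is reached.
    while len(pods) < desired_replicas:
        pods.append(f"web-{next(_pod_ids)}")
    # Too many pods: delete the surplus (in this toy, the most recently listed ones).
    while len(pods) > desired_replicas:
        pods.pop()
    return pods


if __name__ == "__main__":
    pods = reconcile(3, [])        # start from nothing: three pods are created
    print(pods)
    pods.pop(0)                    # simulate one pod crashing
    pods = reconcile(3, pods)      # the loop replaces the missing pod
    print(len(pods))
    pods = reconcile(1, pods)      # scaling down deletes the extra pods
    print(len(pods))
```

The point of the loop design is that it is level-based: it only looks at the current state versus the desired state, so the same code handles crashes, manual deletions, and scale changes.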

Service

Describes how to access an application running on a set of pods. A Service typically defines ports and load balancing, and can be used to control internal and external access to a cluster.
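To make the Service idea concrete, here is a minimal Python sketch. It assumes a simplified model: a Service chooses its pods by matching labels (an equality-based label selector), and requests are then spread across the matching pods in round-robin order. `Pod`, `matches`, and `endpoints` are hypothetical names. Real Services are wired up by the control plane and kube-proxy, and how traffic is actually distributed depends on the proxy mode, so round-robin here is just one illustrative strategy.

```python
"""Toy model of a Service: label selection plus round-robin (hypothetical names)."""
from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict[str, str] = field(default_factory=dict)


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based match: every selector key/value must appear in the pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector: dict[str, str], pods: list[Pod]) -> list[str]:
    """IP addresses of the pods this toy Service would route traffic to."""
    return [pod.ip for pod in pods if matches(selector, pod.labels)]


if __name__ == "__main__":
    pods = [
        Pod("web-1", "10.0.0.11", {"app": "web"}),
        Pod("web-2", "10.0.0.12", {"app": "web"}),
        Pod("db-1", "10.0.0.21", {"app": "db"}),
    ]
    # Round-robin over the matching pods: one simple load-balancing strategy.
    backends = cycle(endpoints({"app": "web"}, pods))
    for _ in range(4):
        print(next(backends))  # alternates between the two "web" pod IPs
```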


What is KubeCon?

KubeCon is the annual conference for Kubernetes developers and users. Since the first KubeCon in 2015 with 500 attendees, KubeCon has grown to become an important event for the cloud native community. In 2019, the San Diego, California, edition of KubeCon drew 12,000 developers and site reliability engineers who were celebrating the open source ecosystem blossoming around the Kubernetes cloud orchestration platform.


What are Kubernetes Containers?

As developers increasingly deploy software to a diverse set of computing environments (different clouds, test environments, laptops, devices, operating systems, and platforms), making that software run reliably everywhere is of paramount importance. That’s where containers come in: they bundle an application with its entire runtime environment. In this sense, containers are a form of virtualization, because they provide a “bubble” in which the application can run with the correct libraries, dependencies, and operating system files. But containers are smaller than virtual machines because they share the host’s operating system kernel and contain only the resources the application needs, and nothing more.


Kubernetes vs Docker

Linux containers have existed since 2008, but it took the emergence of Docker containers in 2013 to make them famous. The resulting explosion of interest in deploying containerized applications (applications that contain everything they need to run) created a new problem: managing thousands of containers. Kubernetes automatically orchestrates the container lifecycle, distributing containers across the hosting infrastructure. It scales resources up or down depending on demand, and it provisions, schedules, deletes, and monitors the health of containers.


What are the components of Kubernetes?

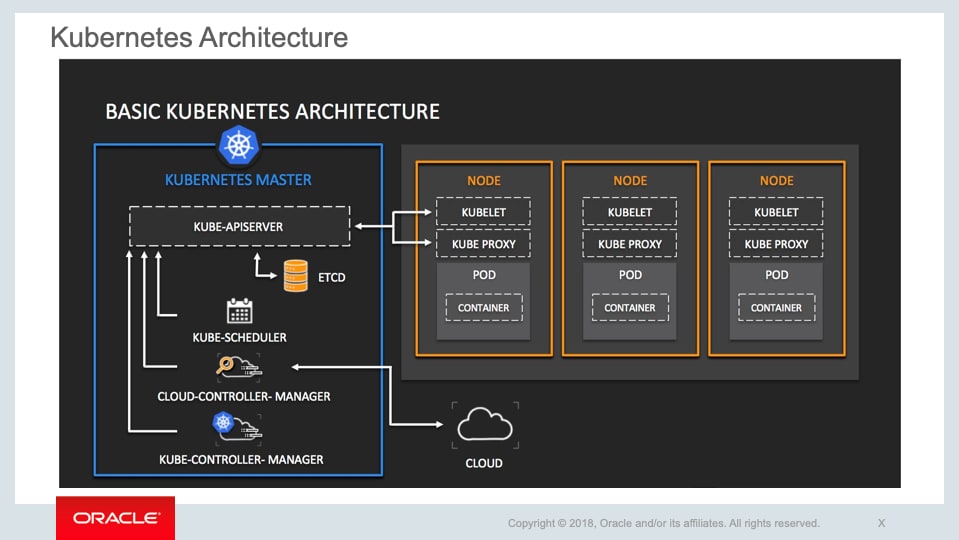

The key components of Kubernetes are clusters, nodes, and the control plane. A cluster contains a set of worker machines, called nodes, and every cluster has at least one worker node. The nodes host pods that contain elements of the deployed application. The control plane manages the nodes and pods in the cluster and, for high availability, often runs across many computers.

The control plane contains the following:

- Kubernetes API server: provides the programming interface (API) for controlling Kubernetes

- etcd: a key-value store for cluster data

- Kubernetes scheduler: matches new pods to available nodes

- kube-controller-manager: runs a number of controller processes that handle node failures, maintain the correct number of pod replicas, join Services and pods via endpoints, and manage service accounts and access tokens

- cloud-controller-manager: links the cluster to a specific cloud provider’s API to manage provider-specific infrastructure such as routes and load balancers
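The scheduler’s job of matching new pods to available nodes can be illustrated with a toy version of its two phases: filtering out nodes that cannot fit a pod, then scoring the nodes that remain. kube-scheduler really does filter and then score, but it uses many pluggable rules. The single “most CPU left over wins” scoring rule and the `Node`, `PodRequest`, and `schedule` names below are hypothetical simplifications.

```python
"""Toy filter-then-score scheduler (hypothetical names and scoring rule)."""
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def schedule(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    """Pick a node for the pod, reserve its resources, and return the node name."""
    # Filtering: keep only nodes with enough free CPU and memory for the request.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # nothing fits; in Kubernetes the pod would stay Pending
    # Scoring: prefer the node with the most CPU left over after placement.
    best = max(feasible, key=lambda n: n.free_cpu_millicores - pod.cpu_millicores)
    # Binding: reserve the requested resources on the chosen node.
    best.free_cpu_millicores -= pod.cpu_millicores
    best.free_memory_mib -= pod.memory_mib
    return best.name


if __name__ == "__main__":
    nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 512), Node("node-c", 1500, 4096)]
    print(schedule(PodRequest("web-1", 250, 256), nodes))      # node-b: most CPU left over
    print(schedule(PodRequest("cache-1", 1000, 2048), nodes))  # node-c: only node with enough memory
    print(schedule(PodRequest("batch-1", 4000, 128), nodes))   # None: no node fits
```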

The node components include:

- kubelet: an agent on each node that makes sure containers are running in a pod

- Kubernetes network proxy (kube-proxy): maintains network rules on each node that allow network communication to pods

- A container runtime, such as containerd or CRI-O (Docker Engine can also be used through the cri-dockerd adapter)

What are the benefits of Kubernetes?

With containers, you can be confident that your applications are bundled with everything they need to run. As you add more containers, which often contain microservices, you can use Kubernetes to manage and distribute them automatically.

With Kubernetes, organizations can:

| Capability | Description |
| --- | --- |
| Scale automatically | Dial deployments up or down, depending on demand. |
| Discover services | Find containerized services by DNS name or IP address. |
| Balance loads | Stabilize deployments by distributing network traffic. |
| Manage storage | Choose local or cloud storage. |
| Control versions | Choose the kinds of containers you want to run, and which ones to replace using a new image or new container resources. |
| Maintain security | Securely update passwords, OAuth tokens, and SSH keys without rebuilding container images. |
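Automatic scaling of pod replicas is typically handled by the Horizontal Pod Autoscaler, whose core rule, as described in the Kubernetes documentation, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below implements only that rule plus clamping to minimum and maximum replica counts. The default bounds of 1 and 10 are assumptions for the example, and the real autoscaler also applies a tolerance band and stabilization windows that are left out here.

```python
"""Sketch of the Horizontal Pod Autoscaler's core scaling rule (simplified)."""
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Return the replica count the core HPA rule would ask for, clamped to bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (the HPA's minReplicas/maxReplicas fields).
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    print(desired_replicas(4, current_metric=90, target_metric=60))   # 6: scale out
    print(desired_replicas(4, current_metric=30, target_metric=60))   # 2: scale in
    print(desired_replicas(4, current_metric=200, target_metric=50))  # 10: capped at max_replicas
```

For example, 4 replicas averaging 90% CPU against a 60% target yields 6 replicas, because ceil(4 × 90 / 60) = 6.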

What are the challenges of using Kubernetes?

While Kubernetes is highly composable and can support a wide variety of applications, it can be difficult to understand and use. As a number of CNCF members have pointed out, Kubernetes is not always the right solution for a given workload. That is why the Kubernetes ecosystem includes a number of related cloud native tools that organizations have created to solve specific workload issues.

Kubernetes deploys containers, not source code, and does not build applications. For logging, middleware, monitoring, configuration, CI/CD, and many other production activities, you’ll need additional tools. That said, Kubernetes is extensible and has proven well suited to a wide variety of use cases, from jet planes to machine learning. In fact, cloud vendors including Oracle, Google, Amazon Web Services, and others have used Kubernetes’ own extensibility to build managed Kubernetes services, which reduce complexity and increase developer productivity.

What is managed Kubernetes?

Our Cloud Infrastructure Container Engine for Kubernetes is a developer-friendly, managed service that you can use to deploy your containerized applications to the cloud. Use Container Engine for Kubernetes when your development team wants to reliably build, deploy, and manage cloud native applications. You specify the compute resources that your applications require, and Container Engine for Kubernetes provisions them within an existing Cloud Infrastructure tenancy.

While you don’t need to use a managed Kubernetes service, our Cloud Infrastructure Container Engine for Kubernetes is an easy way to run highly available clusters with the control, security, and predictable performance of Oracle Cloud Infrastructure. Container Engine for Kubernetes supports both bare metal and virtual machines as nodes, and is certified as conformant by the CNCF. You also get all Kubernetes updates and stay compatible with the CNCF ecosystem without any extra work on your part.


The Kubernetes ecosystem and community

Oracle is a Platinum member of the Cloud Native Computing Foundation (CNCF), an open source community that supports several dozen software development projects organized by maturity level. The graduated projects

How to get started with Kubernetes

Kubernetes has a large ecosystem of supporting projects that have sprung up around it. The landscape can be daunting, and looking for answers to simple questions can lead you down a rabbit hole. But the first few steps down this path are simple, and from there you can explore advanced concepts as your needs dictate. Learn how to:

- Set up a local development environment with Docker and Kubernetes

- Create a simple Java microservice with Helidon

- Build the microservice into a container image with Docker

- Deploy the microservice on a local Kubernetes cluster

- Scale the microservice up and down on the cluster
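As a starting point for the deploy and scale steps, the following sketch builds a minimal Deployment manifest in Python and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The name `helidon-demo`, the image tag `helidon-demo:1.0`, and port 8080 are hypothetical placeholders; substitute the image you built for your own microservice.

```python
"""Generate a minimal Kubernetes Deployment manifest as JSON (placeholder values)."""
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Return a Deployment object as a plain dict, ready to serialize."""
    labels = {"app": name}  # the selector must match the pod template's labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical values for illustration; replace with your own image and port.
    manifest = deployment_manifest("helidon-demo", "helidon-demo:1.0", replicas=2, port=8080)
    print(json.dumps(manifest, indent=2))
```

Assuming the script is saved as `make_manifest.py` (a hypothetical filename), you could run `python make_manifest.py > deployment.json`, deploy with `kubectl apply -f deployment.json`, and then scale with, for example, `kubectl scale deployment/helidon-demo --replicas=4`.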